Posts: 2,195


Posts posted by L'Ancien Regime

-

I just pulled the trigger on that G.Skill RAM. No regrets. Some guys blow $60k restoring some car. It's not all just dollars and cents; there's an aesthetic there. I'm 65. I'm going to be dead soon. Lots of my friends are kicking the bucket, or wish they had kicked the bucket after a bad hip replacement surgery left them limping around in constant pain. Then there's the sorrow of not being 18 with a 16-year-old girlfriend. But that's not me. I'm going to get this rig and do it right. It may very well be my last rig, my best toy ever.

When I bought my new house, my next rig was at the center of my plans. It involved running three 4K monitors, 30" or larger.

This is how it's done. A girlfriend is just going to take my house and bank account and pensions and leave me sleeping under a freeway overpass. I'll have to have a knife fight with some evil bums over who gets to sleep on the abandoned sofa.

I feel sorry for Al, though. That desk sucks. I studied the problem of a desk that can accommodate a 3 x 32" 4K monitor setup like this and came up with the ultimate solution: Restoration Hardware's Monastery Table (AKA Partner Desk, Library Table), 4' x 10'. Even while I was sleeping in the parking lot waiting to take possession of my house, I was planning its purchase. It looked like it would be $6000 for the table/desk and $1000 for delivery. Then RH sent me an email about their spring sale. I nailed that table for $3750 and $250 delivery.

This guy built one and it's nowhere near as nice as mine and he regrets not charging $11k USD for it.

My only regret in this build is that it can't be the 2990WX; there are just too many problems with that thing. It's a mangled Epyc, and the performance just doesn't match the extra cost.

As for the RAM, Wendell at Level1 had this to say:

https://forum.level1techs.com/t/picking-out-the-right-ram-for-2950x-shouldn-t-be-this-hard/132123/12

"See the video we did on 128gb ecc vs non ecc. Extensive benchmarking there. Some surprising results like 128gb of 2933 is faster for most, but not all, things vs 3200 32gb"

Also have a look at this: $999 for a Pro Radeon that's nowhere near the GPU that the $699 Radeon VII is.

-

2 minutes ago, AbnRanger said:

That's fine. I'm just telling you that the GSkill Trident Z 3200 model I use works great on my TR 1950X and my Motherboard has it listed as compatible.

I hear ya and I appreciate it but I'm all about risk avoidance.

-

1 hour ago, AbnRanger said:

You can check your Motherboard's list of compatible RAM. Just grab 4 sticks of the ones I showed you (GSkill Trident Z 3200). They aren't AMD or Intel specific. If you see Intel or AMD in the description, it just means they have been certified to work with those CPUs. This RAM works just fine, at 2933MHz.

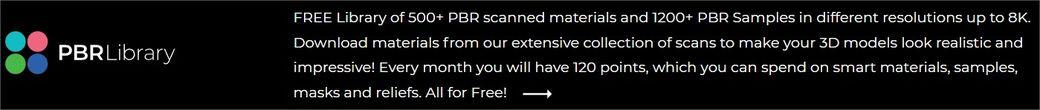

No, I'm going with the exact X399-recommended RAM. Why? Because if it doesn't work properly, try getting a refund on used RAM. $880 is a lot of money to risk on it. At this point I'll be going with the G.Skill Flare X X399 2933MHz if the 3200 or 3600MHz kits don't show up. I've been closely following this matter on the Level1 forums and I'm going with Wendell's dictates on this one. There have been a lot of problems reported in this area with the 2950X and the 2990WX and RAM compatibility. I suspect it has something to do with the way these CPUs are built from multiple 12nm dies, each with its own memory controller; on the 2990WX, two of the four dies don't have direct access to memory at all.

-

23 minutes ago, AbnRanger said:

Did you find a Dark Rock Pro TR4 Cooler, yet? This one on Newegg ships from Germany, but I ordered mine from the EU, as well (Aqua Tuning), and standard priority shipping had it arrive within a week, easily.

I haven't gone shopping for that yet; next on the agenda is RAM: 64GB in four 16GB sticks. The best I can find is the X399-specific G.Skill Flare X at 2933MHz. The 3200MHz and 3600MHz kits are sold out.
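For a rough sense of what separates the 2933MHz kit from the 3200/3600MHz ones, here's a back-of-the-envelope sketch of theoretical peak bandwidth with four sticks running quad-channel. Assumptions: one DIMM per channel on X399 and 8 bytes per transfer on each 64-bit DDR4 channel. These are paper ceilings, not benchmarks; as Wendell's testing suggests, capacity and timings can matter more than raw MHz.

```python
# Theoretical peak DDR4 bandwidth: transfers per second x 8 bytes per 64-bit channel x channels.
# Assumes quad-channel operation (four DIMMs, one per channel on X399); real throughput is lower.
BYTES_PER_TRANSFER = 8  # one 64-bit DDR4 channel moves 8 bytes per transfer


def peak_bandwidth_gbs(mt_per_s: int, channels: int = 4) -> float:
    """Peak theoretical bandwidth in decimal GB/s for a given MT/s rating and channel count."""
    return mt_per_s * BYTES_PER_TRANSFER * channels / 1000


for speed in (2933, 3200, 3600):
    print(f"DDR4-{speed} quad-channel: {peak_bandwidth_gbs(speed):.1f} GB/s")
```

On paper, going from 2933 to 3200 is only about a 9% bump in peak bandwidth, which is why real-world benchmarks like the Level1 ones are the better guide.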

-

Just now, AbnRanger said:

Definitely a good one, but I think you could have gotten a good 80 Plus Gold PSU from a reputable company. Thermaltake, CoolerMaster, Antec, Corsair, Be Quiet, and EVGA are all good brands. This 1300W EVGA model is 80 Plus Gold and about $250.

https://www.newegg.com/Product/Product.aspx?Item=N82E16817438011&ignorebbr=1

Yeah but that's $344 Cdn. That's an extra $64 for another 100W. Remember, I'm buying all this stuff with worthless Canadian pesos as I slowly suffocate under a mountain of snow.

-

2 hours ago, haikalle said:

@L'Ancien Regime Let us know how the new Radeon card performs with 3D-Coat when you get it. I might jump on the AMD boat if it performs well in 3D apps overall.

I just grabbed this; it had the best reviews of all the 1200W PSUs on Amazon and Newegg, and I found one last new one in Vancouver for $280.00 Cdn. It's got six PCIe (VGA) power connectors on it, so I could feasibly run three Radeon VII cards with it, since each card takes two 8-pin connectors. I may get a second GPU. Not sure right now. Considering that other PSUs rated this highly were over $500, I figure this is a good price point.
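A rough sanity check on the three-card idea. Every wattage here is an assumption rather than a measurement: AMD's rated 300W board power for the Radeon VII, the 180W TDP of a Threadripper 2950X, and a hypothetical 100W allowance for drives, fans, board and RAM. The connector check assumes two 8-pin PCIe plugs per Radeon VII.

```python
from __future__ import annotations

# Rough PSU budget. All figures are assumptions, not measurements.
PSU_WATTS = 1200
GPU_WATTS = 300               # Radeon VII rated board power
CPU_WATTS = 180               # Threadripper 2950X TDP
REST_OF_SYSTEM_WATTS = 100    # hypothetical allowance for everything else
PCIE_CONNECTORS_ON_PSU = 6
CONNECTORS_PER_GPU = 2        # two 8-pin PCIe power connectors per card


def budget(num_gpus: int) -> tuple[int, float, bool]:
    """Return (estimated load in W, load as % of PSU rating, enough PCIe connectors?)."""
    load = num_gpus * GPU_WATTS + CPU_WATTS + REST_OF_SYSTEM_WATTS
    enough_connectors = num_gpus * CONNECTORS_PER_GPU <= PCIE_CONNECTORS_ON_PSU
    return load, 100 * load / PSU_WATTS, enough_connectors


for n in (1, 2, 3):
    load, pct, ok = budget(n)
    print(f"{n} GPU(s): ~{load} W ({pct:.0f}% of {PSU_WATTS} W), connectors OK: {ok}")
```

Under these assumptions, three cards would sit at roughly 98% of the PSU's rating with no room for transient spikes, so two cards looks like the more comfortable ceiling.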

Auto Undervolt in the AMD Radeon Settings interface effectively deals with excessive fan noise.

-

2 hours ago, digman said:

Not quite back yet. I had a break in my schedule and of course I got my favorite software to play with and still like answering questions.

Atm I am using an ancient laptop. I can do a little in the 32-bit version of 4.8.10. I am very limited but I can at least mess about.

Running Windows 10 32-bit.

I will be in and out in the forums as time allows.

Gimme a call sometime

-

There are risks on both sides. If the 2000 series cards weren't dying all the time, I'd have gone with two 2070s months ago. But here we are, and I can no longer delay. Making a choice means having to come to terms with the shortcomings of both platforms: space invaders or a noisy fan, RTX or 16GB of HBM2 VRAM and pro card drivers. Even now, just finding the G.Skill RAM I want at 3600MHz is impossible; X399-specific sticks are almost all out of stock save for the 2933MHz 16GB sticks. Getting the PSU I want is hard too. I've got to get this thing built though. I've waited long enough.

-

Yessss...

https://wccftech.com/amd-radeon-vii-graphics-card-radeon-pro-software-enterprise-support/

All the reviewers are dumping on it but that didn't stop it from being sold out on day one. At $699 I wonder why that was? kek.

-

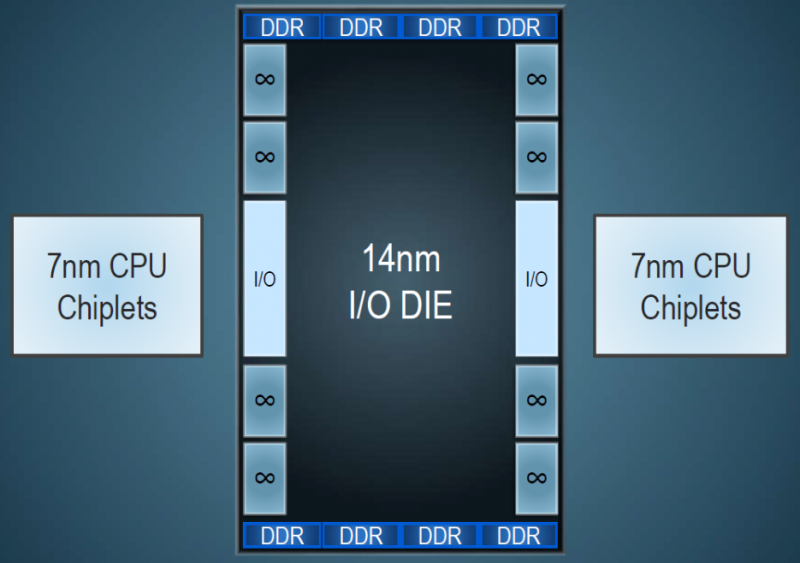

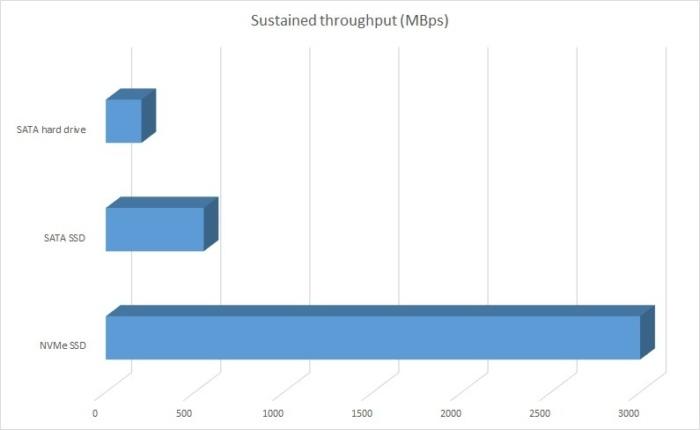

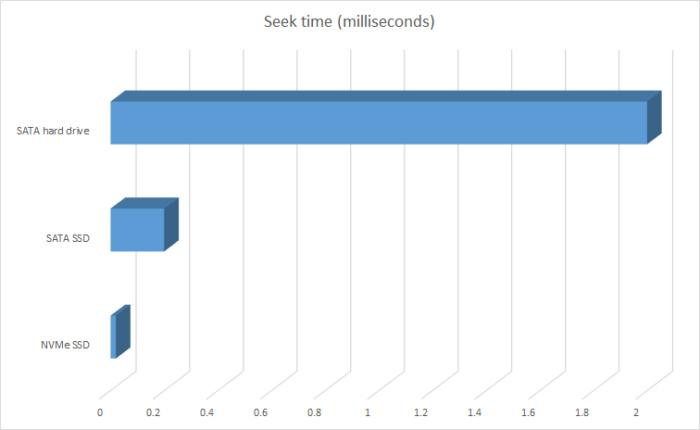

Maybe you already know all this; I knew M.2 was a great new form factor, but I'm just realizing how insanely fast NVMe drives in that slot are.

https://www.pcworld.com/article/2899351/storage/everything-you-need-to-know-about-nvme.html

There’s a reason why we still have SATA SSDs and NVMe SSDs. Knowing the potential of memory-based SSDs, it was clear that a new bus and protocol would eventually be needed. But the first SSDs were relatively slow, so it proved far more convenient to use the existing SATA storage infrastructure.

Though the SATA bus has evolved to 16Gbps as of version 3.3, nearly all commercial implementations remain 6Gbps (roughly 550MBps after overhead). Even version 3.3 is far slower than what today’s SSD technology is capable of, especially in RAID configurations.

For the next step, it was decided to leverage a much higher-bandwidth bus technology that was also already in place—PCI Express, or PCIe. PCIe is the underlying data transport layer for graphics and other add-in cards. As of gen 3.x, it offers multiple lanes (up to 16 in most PCs) that handle darn near 1GBps each (985MBps).
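To see where the article's numbers come from: headline link rates lose some capacity to line encoding (SATA 3 uses 8b/10b, PCIe 3.0 runs at 8 GT/s with 128b/130b). This sketch accounts for encoding only; real drives lose a little more to protocol overhead, which is why SATA lands around 550MBps rather than 600.

```python
def usable_mb_per_s(gigatransfers: float, payload_bits: int, total_bits: int, lanes: int = 1) -> float:
    """Line rate (GT/s) x encoding efficiency x lanes, converted to decimal MB/s.

    Only line encoding is accounted for; protocol overhead would lower these figures further.
    """
    gbit_per_s = gigatransfers * payload_bits / total_bits * lanes
    return gbit_per_s * 1000 / 8


sata3 = usable_mb_per_s(6.0, 8, 10)                  # SATA 3: 6 Gb/s, 8b/10b encoding
pcie3_lane = usable_mb_per_s(8.0, 128, 130)          # PCIe 3.0: 8 GT/s, 128b/130b encoding
pcie3_x4 = usable_mb_per_s(8.0, 128, 130, lanes=4)   # a Gen3 x4 link, as used by M.2 NVMe slots

print(f"SATA 3:      {sata3:.0f} MB/s")
print(f"PCIe 3.0 x1: {pcie3_lane:.0f} MB/s")
print(f"PCIe 3.0 x4: {pcie3_x4:.0f} MB/s (~{pcie3_x4 / sata3:.1f}x SATA 3)")
```

The per-lane result matches the article's 985MBps, and a Gen3 x4 M.2 slot works out to roughly six and a half times a SATA 3 port before overhead.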

PCIe is also the foundation for the Thunderbolt interface, which is starting to pay dividends with external graphics cards for gaming, as well as external NVMe storage, which is nearly as fast as internal NVMe. Intel’s refusal to let Thunderbolt die was a very good thing, as many users are starting to discover.

Of course, PCIe storage predates NVMe by quite a few years. But previous solutions were hamstrung by older data transfer protocols such as SATA, SCSI, and AHCI, which were all developed when the hard drive was still the apex of storage technology. NVMe removes their constraints by offering low-latency commands, and multiple queues—up to 64K of them. The latter is particularly effective because data is written to SSDs in shotgun fashion, scattered about the chips and blocks, rather than contiguously in circles as on a hard drive.
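The queue difference is easy to put in numbers. This sketch uses nominal ceilings only (AHCI with NCQ: one queue, 32 commands deep; NVMe: up to roughly 64K queues of up to roughly 64K commands each). Real drives expose far fewer queues, and drivers often create about one queue per CPU core, so treat the NVMe figure as a theoretical upper bound.

```python
# Nominal protocol ceilings, not the limits of any particular drive.
AHCI_QUEUES, AHCI_DEPTH = 1, 32                  # AHCI with SATA NCQ: one queue, 32 commands
NVME_QUEUES, NVME_DEPTH = 64 * 1024, 64 * 1024   # NVMe: ~64K queues, ~64K commands per queue


def max_in_flight(queues: int, depth: int) -> int:
    """Upper bound on commands outstanding at once: number of queues x commands per queue."""
    return queues * depth


ahci = max_in_flight(AHCI_QUEUES, AHCI_DEPTH)
nvme = max_in_flight(NVME_QUEUES, NVME_DEPTH)
print(f"AHCI: {ahci} commands in flight")
print(f"NVMe: {nvme:,} commands in flight ({nvme // ahci:,}x AHCI)")
```

The point is less the giant number than the structure: with many independent queues, commands from different cores don't have to funnel through a single 32-deep queue.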

The NVMe standard has continued to evolve to the present version 1.31 with the addition of such features as the ability to use part of your computer’s system memory as a cache. We’ve already seen it with the supercheap Toshiba RC100 we recently reviewed, which forgoes that onboard DRAM cache that most NVMe drives use, but still performs well enough to give your system that NVMe kick (for everyday chores).

This ASRock Fatal1ty X399 Professional Gaming motherboard has three M.2 slots.

https://www.vuugo.com/asrock-motherboards-FATAL1TY-X399-PROFESSIONAL-GAMING.html

- 2 x Ultra M.2 Sockets (M2_1 and M2_2), support M Key type 2242/2260/2280 M.2 SATA3 6.0 Gb/s module and M.2 PCI Express module up to Gen3 x4 (32 Gb/s)*

- 1 x Ultra M.2 Socket (M2_3), supports M Key type 2230/2242/2260/2280 M.2 SATA3 6.0 Gb/s module and M.2 PCI Express module up to Gen3 x4 (32 Gb/s)*

-

It was possible to recreate node networks in ICE and then replicate them in Houdini and vice versa, more or less.

Too bad the same isn't true for Substance Designer and Houdini.

-


Man this Corteks guy sure has a dour view of the future...

-

Somebody flogging off their review copy? It's got the 3 games included...

-

3 hours ago, AbnRanger said:

After watching all those reviews, my previous assessment remains the same. FOR GPU RENDERING + OPENCL SIMULATIONS (Houdini), this is a BEAST and a heck of a value, when you consider the only thing close to it is an RTX 2080 Ti...which costs $500 more. FOR A GAMING CARD....depends on whether you value the 16GB of Memory, for future-proofing purposes (knowing Game titles will only increase the RAM consumption more and more, as it becomes available on consumer cards), or the RTX that nobody uses yet, and may not, because there are software based solutions coming via DirectX and Vulkan.

If you want it, go get it...while you can.

You pushed me over the cliff, ya son of a gun.

I just pulled the trigger on that Radeon VII. I think one of the deciding factors is that it never was meant to be a gaming card. It's a cut-down version of the Radeon Instinct, a scientific research card that sells for more than $10,000.00. It's got the high-end error correction stuff taken out, which isn't necessary for artists, so it won't be competing with the Instinct in that arena. I think this is going to be one hell of a lookdev card, at the very least.

$1059.43 in Canadian pesos. Everybody else, even in the USA, was sold out except AMD themselves. Delivery in 3-4 business days. And that's with the 3 games for free; not my type of gaming but nonetheless the aftermarket producers don't seem to be offering that deal, only the FE.

ONE PER CUSTOMER

-

35 minutes ago, haikalle said:

@L'Ancien Regime Do you know which 2070 cards have the better memory chips?

Very good question. We're going to have to research this.

Just watched that Linus video review. He seems to think the Radeon VII might be the ideal card for content creators.

-

With GeForce RTX 2080 Founders Edition subjected to similar methodology, AMD's flagship ended up a full 10 dB(A) higher. What AMD asks of its cooler in order to match Nvidia's performance is not acceptable at the same price point.

Tom's Hardware
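For scale: decibels are logarithmic, so "a full 10 dB(A) higher" is a bigger gap than it sounds. A 10 dB increase means ten times the acoustic power, and by the common psychoacoustic rule of thumb it is heard as roughly twice as loud. A quick sketch; the "2x per 10 dB" loudness rule is an approximation, not an exact law.

```python
def power_ratio(delta_db: float) -> float:
    """Ratio of acoustic power implied by a level difference in dB: 10^(dB/10)."""
    return 10 ** (delta_db / 10)


def perceived_loudness_ratio(delta_db: float) -> float:
    """Rule-of-thumb perceived loudness ratio: roughly doubles for every +10 dB (approximation)."""
    return 2 ** (delta_db / 10)


# Radeon VII vs. RTX 2080 FE in Tom's Hardware's measurement: +10 dB(A)
delta = 10
print(f"acoustic power: {power_ratio(delta):.0f}x, perceived loudness: ~{perceived_loudness_ratio(delta):.0f}x")
```

The same formula shows why even a 3 dB difference matters: it is already about double the acoustic power.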

-

I was just reading WCCF Tech, hit refresh, and this popped up fresh off the press:

https://wccftech.com/amd-radeon-vii-worlds-first-gaming-7nm-graphics-card-review-roundup/

I'm not really sold on this; I'm thinking an RTX 2070, if you can find one with Samsung VRAM instead of Micron, is probably a better deal, especially if you buy two of them.

More troubling, we believe, is Radeon VII’s acoustic situation. Following in Nvidia’s footsteps, AMD retired its blower-style cooler in favor of one with axial fans. But rather than creating a quieter thermal solution able to keep Vega 20 cooler, Radeon VII is easily just as loud as the reference Radeon RX Vega 64 due to a fan curve that ramps up to 2,900 RPM under load. We approached AMD about Radeon VII’s noise because, frankly, it’s disappointing. The company explained that the card’s shipping configuration is tuned for enthusiasts, and that it’s working on other options that’d conceivably trade performance for better acoustics.

-

https://wccftech.com/amd-radeon-vii-graphics-card-allocation-report-paper-launch/

A very limited edition

Only 76 units have made their way to Overclockers UK headquarters. The OCUK allocation is supposedly half of what the UK will receive on launch day. And that is not the worst. According to Cowcotland, Spain and France will get only 20 units each. It was not confirmed if the number came from one retailer or one manufacturer, but even if this meant 20 units from one manufacturer for one store, the numbers are still unbelievably low.

The AMD Radeon VII will cost around 739 EUR in Europe. There is no information about availability in other regions, but early rumors indicated that only a few thousand units will be available worldwide at launch.

-

On 2/5/2019 at 9:57 AM, AbnRanger said:

That's why you pre-order? I don't think they will stop at 5000 if they sell out quickly. That may just be their low-ball estimate. Who knows.

They might not want to produce more if it's being produced at a loss and the executive who made the decision to put it into production has left AMD or been driven out.

-

I want to post this here because I'm on the threshold of pulling the trigger on my next rig (waiting for the Radeon VII release and test results this week) and I'm thinking a lot about what is right to buy without going nuts/bankrupt on it. This is part of a longer conversation on GPU rendering with Redshift and Octane, which I'll post; the Entagma guys admire CPU rendering, but it's becoming evident that it's just too slow. After their conversation on builds, one of the thread readers makes this comment, which I'd like to hold up for discussion:

Alex Fisher, 5 months ago

"I'm just gonna add something to the topic of having more GPUs for lookdev with Redshift specifically. As far as I know and have tested, when having data-heavy scenes, it's actually better to have one GPU for rendering since the feedback time drops dramatically. I have tested a system with 8 GPUs and I can't stress enough how much longer it takes to load and refresh a scene with 8 GPUs. Since I found this out last week, all those GPUs went into dedicated render rigs and I have 2 GPUs in my workstation, one for displaying stuff and one for rendering. Also, when using 1 frame per GPU rendering, you also gain farm speed since you optimise loading of scene files at different times. Love your videos guys."
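The "1 frame per GPU" setup he describes amounts to splitting a frame range across cards, so each GPU loads the scene once and renders whole frames on its own instead of every GPU sharing every frame. A minimal scheduling sketch, assuming a hypothetical workstation where GPU 0 drives the displays and the other cards render; it only models the frame assignment, not how Redshift itself is told which GPU to use.

```python
from __future__ import annotations


def assign_frames(first: int, last: int, render_gpus: list[int]) -> dict[int, list[int]]:
    """Round-robin whole frames (inclusive range) to GPUs, one frame per GPU at a time.

    Hypothetical helper for illustration: each GPU gets its own list of frames to render
    independently, rather than all GPUs cooperating on each frame.
    """
    if not render_gpus:
        raise ValueError("need at least one render GPU")
    jobs: dict[int, list[int]] = {gpu: [] for gpu in render_gpus}
    for i, frame in enumerate(range(first, last + 1)):
        jobs[render_gpus[i % len(render_gpus)]].append(frame)
    return jobs


# Hypothetical layout: GPU 0 drives the displays, GPUs 1 and 2 render frames 1-10.
print(assign_frames(1, 10, render_gpus=[1, 2]))
```

Round-robin keeps the cards evenly loaded when frames cost about the same; handing each card a contiguous chunk instead would be the alternative if consecutive frames share cached data.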

-

7 hours ago, AbnRanger said:

The AMD rep said that for Blender (I presume he is talking about Cycles and/or ProRender), the performance increase in Radeon VII is roughly 27%. That would easily put it into 2080 Ti territory or better, as the Vega 64 already outperforms the 1080 Ti/2080.

I'm thinking that original issue of 5000 will be sold out within the first day or two.

AMD's CEO and CTO on Radeon VII, ray tracing and beyond

in CG & Hardware Discussion

Posted · Edited by L'Ancien Regime

And it looks like, at least initially, Navi won't be rivaling the 7nm Vega architecture for high-performance computing and desktop workstations. Navi appears to be focused, in the near future, on gaming, specifically targeting the upcoming consoles.

OTOH, the new X570 motherboards for Ryzen 3000 desktops will have PCIe 4.0

and

The 8 core models can sell for around $199-$299 while the 12 core parts can go for $399 and finally, the 16 core parts can end up around $499. The reason we will be looking at such good prices is that unlike Threadripper CPUs which use a bigger PCB and four dies (based on EPYC layout), the Ryzen CPUs will only be featuring 2 dies and that saves up space and design costs. Also, the 6 core and 4 core parts may end up under the $150-$200 US bracket which would make them an ideal choice for budget users.

https://wccftech.com/amd-ryzen-3000-cpus-x570-motherboards-and-radeon-navi-gpus-7nm-launch-rumor/
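Working those rumored prices out per core. The Ryzen 3000 figures are the article's rumors, not confirmed pricing; the Threadripper 2950X's $899 launch price is added only for comparison with the chip in this build.

```python
# Rumored Ryzen 3000 prices from the WCCFtech article (unconfirmed), plus the
# Threadripper 2950X launch price for comparison. Values: (cores, low USD, high USD).
parts = {
    "Ryzen 3000 8-core (rumor)": (8, 199, 299),
    "Ryzen 3000 12-core (rumor)": (12, 399, 399),
    "Ryzen 3000 16-core (rumor)": (16, 499, 499),
    "Threadripper 2950X (launch)": (16, 899, 899),
}


def per_core(price: float, cores: int) -> float:
    """Dollars per core."""
    return price / cores


for name, (cores, low, high) in parts.items():
    low_pc, high_pc = per_core(low, cores), per_core(high, cores)
    span = f"${low_pc:.2f}" if low == high else f"${low_pc:.2f} to ${high_pc:.2f}"
    print(f"{name}: {span} per core")
```

If the rumor holds, the 16-core part would cost a little over half as much per core as the 2950X did at launch, though the mainstream platform is dual-channel with far fewer PCIe lanes than X399.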

You can never be up to the moment very long in this technological race. At some point you just have to stop and say what do I need to do the job and then be happy with that.