Posts posted by L'Ancien Regime

-

-

Polygonal Modeling

SubD Modeling (Catmull-Clark and other variants)

NURBS Modeling

BREP Modeling

Voxel Modeling

And now Implicit Modeling, which has been devised in response to numerous problems encountered with BREP Modeling:

https://www.3dcadworld.com/implicit-modelling-for-complex-geometry/

The problem is the fundamental way that geometry is represented. All the major CAD programs use boundary representations (b-reps) to define solid geometry. B-reps use topology, such as vertices, edges and faces, which are defined by geometry such as points, curves and surfaces. For example, an edge is a bounded region of a curve and a face is a bounded region of a surface.

B-reps work fine for relatively simple geometry. Older CAD programs sometimes used Boolean operations to combine primitive objects such as cubes and spheres. B-reps are much more versatile, allowing profiles to be swept and lofted, solids to be shelled and so forth. However, when features are combined with fillets and blends, or large numbers of features are included, calculating the topology becomes exponentially more demanding on the computer. Many modelling operations involve combining simpler shapes with Boolean and blending operations. B-rep modelling has to calculate all of the new edges that are formed where faces intersect. For objects with planar faces the individual calculations are relatively simple, but the number of intersections increases by approximately the square of the number of faces. For intersections between curved surfaces the edges are complex splines, making the calculations considerably more complex. When faces are close to tangent, things get really difficult.
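To put a rough number on the "square of the number of faces" remark, here is a minimal sketch. It assumes the worst case where every pair of faces has to be checked for an intersection; `candidate_face_pairs` is a made-up helper name, not something from the article or any CAD kernel.

```python
from math import comb


def candidate_face_pairs(num_faces):
    """Number of distinct face pairs, n * (n - 1) / 2 (hypothetical helper).

    Assumption: every pair is a candidate for an intersection test,
    which is the worst case the quoted article is describing.
    """
    return comb(num_faces, 2)


for n in (10, 100, 1000):
    # 10x more faces gives roughly 100x more pairs to test
    print(n, candidate_face_pairs(n))
```

Real modellers presumably prune most of those pairs with bounding boxes and similar tricks, so treat this as an upper bound and not a measurement.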

Another issue with B-reps can be determining which points in the model are inside the boundary – the solid material. The method is to shoot a ray from the point in an arbitrary direction. If the ray passes the boundary an odd number of times then the point is inside the boundary. If the ray passes the boundary an even number of times then the point is outside the boundary. Since floating point arithmetic is used, rounding errors may mistakenly count boundary crossings when the ray is close to the boundary. There are also ambiguities such as when a ray is tangent to a surface or passes through a vertex – it is not clear whether this counts as a boundary crossing, or as two crossings. Because of these issues, additional checks are required to ensure that b-rep modellers are robust. This makes them mathematically inefficient.
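The odd/even ray test described above can be sketched in 2D, with a polygon standing in for the solid's boundary. This is only an illustration of the idea, not how any particular CAD kernel does it; `point_in_polygon` and the sample shapes are my own assumptions. The half-open comparison on `y` is one common way of settling the "ray passes through a vertex" ambiguity the article mentions.

```python
def point_in_polygon(point, polygon):
    """Even-odd test: cast a ray in the +x direction and count crossings.

    `polygon` is a list of (x, y) vertices in order (hypothetical input
    format). An odd number of crossings means the point is inside.
    """
    px, py = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Half-open rule: an edge counts only if its endpoints lie on
        # opposite sides of the ray, so a vertex sitting exactly on the
        # ray is counted once instead of twice or not at all.
        if (y1 > py) != (y2 > py):
            # x coordinate where the edge meets the horizontal line y = py
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if x_cross > px:
                inside = not inside  # each crossing flips the parity
    return inside


square = [(0, 0), (4, 0), (4, 4), (0, 4)]
diamond = [(2, 0), (4, 2), (2, 4), (0, 2)]

print(point_in_polygon((2, 2), square))   # centre of the square
print(point_in_polygon((5, 2), square))   # to the right of the square
print(point_in_polygon((1, 2), diamond))  # ray passes through a vertex
```

Note that the floating point problem is still there: a point sitting very close to an edge can land on either side of `x_cross > px`, which is exactly the robustness issue the article is complaining about.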

-

Now this is the kind of talk I like to hear.

-

Parametrics are becoming more and more dominant

-

VBS3 is basically the military version of Arma 3. Both have the same technology behind them, though VBS3 can handle 1500 users in a simulation at a time, allowing vast military operations to be modelled on a specific landscape without burning millions of dollars in fuel etc. There are other differences; Arma 3 is better graphically. If you're going to have a max of 150 users in a WAN or LAN game instead of 1500 you can do that.

Thus I tend to watch VBS3 news as a harbinger of what is to come for Arma 3. VBS4 is coming out. And that means that after 6 years there will probably be an Arma 4 with an all new engine. Too bad you have to be a government of a country with a military to buy VBS.

This just got published 6 hours ago. Go to the 4 minute mark and watch. It just blew my mind. VBS Geo server has taken VBS4 global. And, like MS Flight Sim 2020, it does amazing things with generating landscapes on the fly.

https://bisimulations.com/products/vbs3

Oh and if you're an Arma 3 fan like me you'll definitely want the latest upgrade to HAL (Hetman Artificial Leader). I downloaded and installed it last night. It was already being abandoned by its creator, Rydygier, two years ago when I left off playing computer games; he simply didn't have the time. It's a powerful script that has been greatly improving large scale battlefield scenarios since previous versions of Arma.

It's been taken up by NinjaRider600 and he's published it as NR6. It needs a PDF but the addition of all sorts of new nodes for creating AI-led battles is really excellent.

"This is a modified/updated version of HET MAN - HAL by Rydygier which includes various changes/upgrades concerning battlefield immersion, new features and compatibility with dedicated server. The functionality of the script itself is expanded and upgraded but works almost the same way as the original HAL does. For a detailed description of the original system by Rydygier, you can find his here. The original scope I had for this project was to add some immersion with better player to AI commander interactions but it turned out to be something vastly more complex. The intended NR6 HAL experience is contained in the NR6 Pack, my main project, which can be found above the introduction. There you will find tons of new utilities and scripts that expand the battlefield with the intention of making HAL an almost autonomous battlefield generator which would require as little mission making as possible."

"To activate HAL for one side, one of the units of that side must be named LeaderHQ. Essential also is the placement on the map of any object (for example, an empty trigger) named RydHQ_Obj1. The location is entirely your choice. Its position will designate a target point which the Artificial Commander will try to conquer at first (for example, a spot near the leader of the opposing side)."

It's best to download it off Steam Workshop though. An effortless installation.

-

-

Varomix looks at Karma in H18

-

23 hours ago, Andrew Shpagin said:

Developing render does not take even an hour of my time. It is a separate developer (Vladimir - Carrots) that is like me in terms of speed and productivity. He does many things now, including nodal editor and GPU texturing speedup.

Well that's good news. Thanks.

-

I disagree. I don't want to see Andrew get sidetracked into wasting his time on building a render engine. At best he should either embed Radeon Pro Render into the 3D Coat workflow or make a really efficient plug-in to render your 3D Coat sculpts effortlessly in EEVEE or Cycles in Blender. He's already got a partial implementation of Renderman in 3D Coat. He could just add a more complete set of Renderman controls to the 3D Coat interface we already have. It's free, it's top quality. No need for more than that. Take a good look at Zbrush, which has far more resources than Andrew for development; they're not wasting their limited resources on their BPR renderer. They've got a good plug-in to Keyshot and they're letting Luxion do all the necessary specialized work to develop Keyshot. And nobody among the vast pool of Zbrush artists is complaining about that.

Jack of all trades, master of none.

-

-

-

1 hour ago, AbnRanger said:

You can usually find the top model (Enterprise) on eBay for around $300+... The extra buttons really do help immensely. I rarely have to reach for the keyboard for shortcuts.

I imagine that with one of these, when you go between different programs, you can avoid having to build up the fingertip memory for each program's different key layout for navigating in its workspace window.

-

Wow, $419. I want one though. Next purchase: Cintiq 24" Pro with stand, $3600 Cdn. I can just imagine using that with a SpaceMouse.

-

Which model SpaceMouse did you get?

-

12 hours ago, Innovine said:

Just a general feeling with 3d coat overall, but I never really feel I know what a tool is going to do until I start using it. Sometimes they will bulge out a ridiculous amount, some times there is no effect whatsoever. Some tools I have to crank up to really high Depth level just to see a difference, others I need to set to below 1% to get an acceptable calm response. I use a Cintiq and the pressure sensitivity commonly feels bad, either no response or overwhelming, and this differs from tool to tool. The tools are ok for what they do, I guess, but they don't feel like a reliable TOOL, like a hammer or a screwdriver. They feel like finicky, unstable, unpolished things which are liable to jump around in my hands and destroy my model as much as they help.

Does anyone else feel the same way? Would 3d-coat benefit from an improvement and polishing of how tools work, to make them feel more predictable and united in their responses? Can anything be done about the sculpting-in-shaving-foam feeling?

I used to feel that way with the Transpose Tool. Then one day I started to delve into it, exploring all its functionality and discovered that it's super powerful, precise, and amazing. 3D Coat takes some serious work to master, but not so much as Autodesk Alias which is really difficult to master. Guys that do surfacing are some real wonks.

-

Can't wait to see this project textured.

-

53 minutes ago, Rygaard said:

Is there a possibility to allow the surface mode of Sculpt Room to allow geometry to accept UVs?

This way we could open texture maps directly on geometry and have the opportunity to use Displacement maps to physically deform the mesh surface, and so have these displacement maps be compatible with Sculpt Layers.

I say this because there are many techniques (one of these techniques would be using XYZ Texture) that could be performed using texture maps in geometry that would accept and have UVs.

For example, in Blender, users have access to Modifiers (non-destructive process) such as Displacement Modifier, Multiresolution Modifier, Boolean Modifier, and many others. And most of these modifiers use UVs - texture maps.

Thanks

This would certainly shatter the barrier between sculpting and texturing, a welcome development. Another reason for me to make the shift over to Blender. Thanks.

-

-

-

2.82 Alpha is in the works.

-

For a bit more than 500 Euros I'd go for the RTX 2070 myself. It really depends on your render engine of choice. Everyone is going to Redshift, Octane etc. for GPU rendering, and those use Nvidia's proprietary CUDA. If you don't really need that, Radeon cards can be a bargain.

-

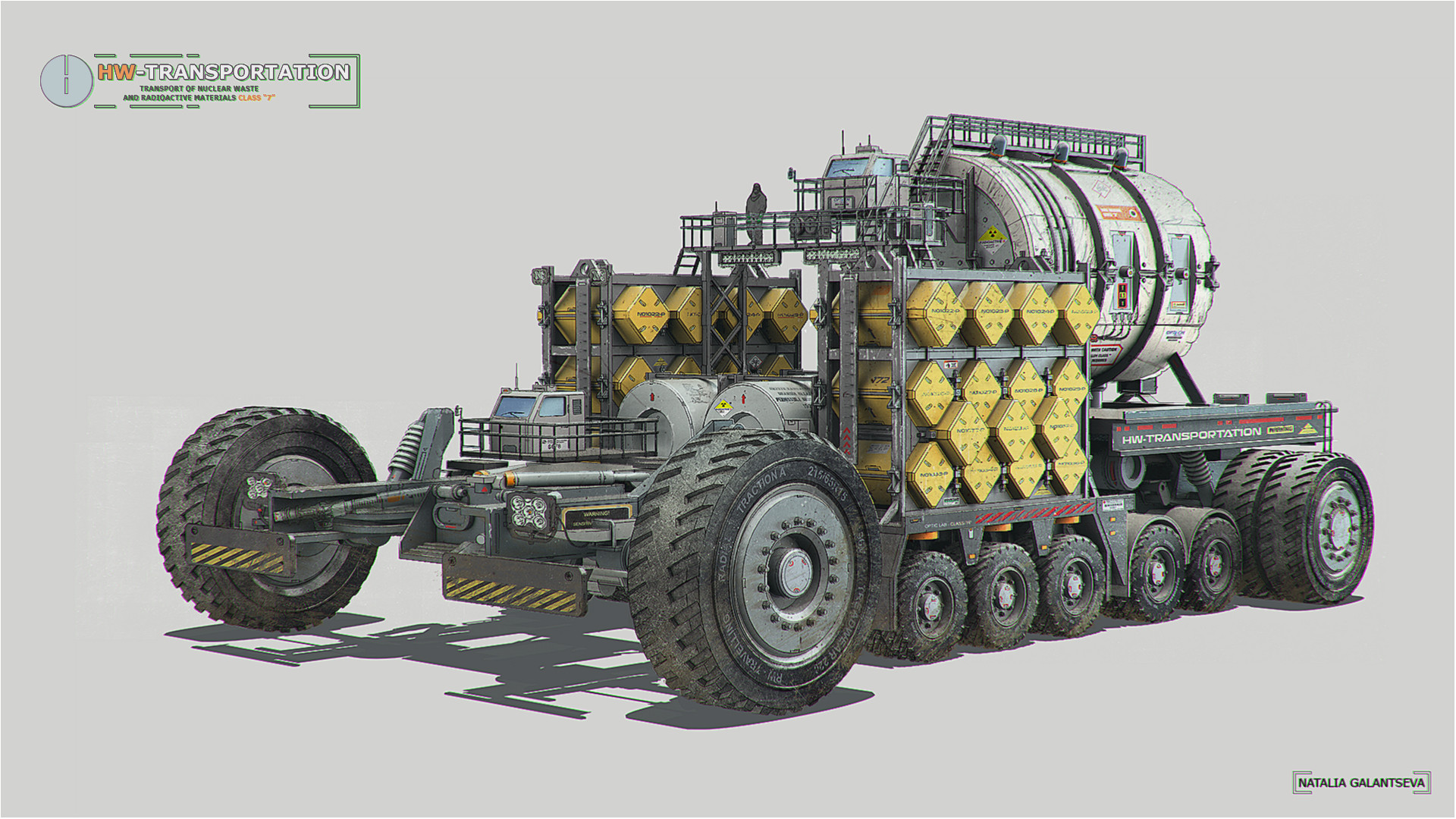

A pretty macho style for a girl...

-

Oct 24 update from MS; Weather

-

The CPU has been the heart of every PC since Intel’s x86 processors became popular over two decades ago. Yet the CPU does have weaknesses, the greatest of which is its relatively linear data execution. Graphics processors, by comparison, consist of many small cores that execute data simultaneously. This makes it easier for them to perform certain tasks, like video decoding and 3D graphics.

Both Intel and AMD know this, and have for some time. In response they’ve combined the strengths of both CPUs and GPUs, resulting in a new type of product called the APU.

What is an APU? The term APU stands for Accelerated Processing Unit. At the moment, this is a term that only AMD is using for its products. Intel’s recently released update to its processors also qualifies as an APU; Intel simply seems unwilling to use the definition. That’s understandable, since the company has been known as the world’s leading CPU maker for years.

An APU is simply a processor that combines CPU and GPU elements into a single architecture. The first APU products being shipped by AMD and Intel do this without much fuss by adding graphics processing cores into the processor architecture and letting them share a cache with the CPU. While both AMD and Intel are using their own GPU architectures in their new processors, the basic concepts and reasons behind the decision to bring a GPU into the architecture remain the same.

AMD and Intel wouldn’t go to the trouble of integrating a GPU into their CPU architectures if there weren’t some benefits to doing so, but sometimes the benefit of a new technology seems to be focused more on the company selling the product than the consumer. Fortunately, the benefits of the APU are dramatic and will be noticed by end users.

-

5 hours ago, Falconius said:

Not gonna lie. That dragon video is extremely cool.

I never really saw the 3d pens as all that practical though. Obviously I'm wrong.

Wow, he goes wild on this one in large scale

-

I'm on the internet all the time and I've never seen this before...

-

-

Marvelous Designer is the ultimate (CLO3d is the commercial version for actually manufacturing clothes).

-

3dcoat ipad/mobile version

in CG & Hardware Discussion

Posted · Edited by L'Ancien Regime

I doubt it in the immediate future. There are SoCs (systems on a chip) out there that combine CPU and GPU on one chip, and they're getting more powerful all the time. Eventually you'll probably see iPads that use them and can run 3D Coat, but then there's the problem of writing it for iPadOS, not to mention power consumption issues. I wouldn't expect to see 3D Coat on an iPad for 3 years at the earliest, if ever.

OTOH you could install a Teradici card in your workstation, then download the Teradici remote desktop app to your iPad, and then be able to log into your computer from anyplace with a decent low latency link, turn your home/office workstation on and off, and use its full graphical capacity to work with Maya, Houdini, 3D Coat or Zbrush remotely. This is the technology that Catia V6 uses for engineers to do 3D design and CAD/CAM work from widely distributed networks all over the world. They do enterprise level work that has tens of thousands of engineers and designers all working simultaneously on big projects as they travel the world. Works for PC gaming too, with good frame rates and resolution.

https://www.teradici.com

You can get the PCIE X2 version on eBay if you shop around.

https://aecmag.com/software-mainmenu-32/994-teradici-workstation-access-software